The Internet Should Be a Public Good

The Internet was built by public institutions — so why is it controlled by private corporations?

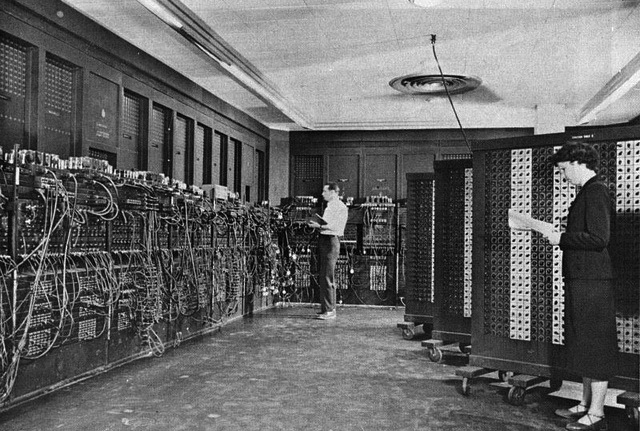

ENIAC, the first general-purpose electronic computer. US Army

On October 1, the Internet will change and no one will notice. This invisible transformation will affect the all-important component that makes the Internet usable: the Domain Name System (DNS). When you type the name of a website into your browser, DNS is what converts that name into the string of numbers, known as an IP address, that specifies the website's actual location. Like a phone book, DNS matches names that are meaningful to us with numbers that aren't.

For years, the US government has controlled DNS. But in October, the system will become the responsibility of a Los Angeles-based nonprofit called the Internet Corporation for Assigned Names and Numbers (ICANN).

ICANN has actually already been managing DNS since the late 1990s under a contract with the Commerce Department. What's new is that ICANN will have independent authority over DNS, under a new "multi-stakeholder" model that's supposed to make Internet governance more international.

The actual impact is likely to be small. The trademark protection measures that police DNS on behalf of corporations will remain in place, for instance. And the fact that ICANN is located in Los Angeles and incorporated under US law means that the US government will continue to exercise influence, if somewhat less directly.

But the symbolic significance is huge. The October handover marks the last chapter in the privatization of the Internet. It concludes a process that began in the 1990s, when the US government privatized a network built at enormous public expense.

In return, the government demanded nothing: no compensation, and no constraints or conditions on how the Internet would take shape.

There was nothing inevitable about this outcome — it reflected an ideological choice, not a technical necessity. Instead of confronting critical issues of popular oversight and access, privatization precluded the possibility of putting the Internet on a more democratic path.

But the fight is not over. The upcoming ICANN handoff offers an opportunity to revisit the largely unknown story of how privatization happened — and how we might begin to reverse it, by reclaiming the Internet as a public good.

The Internet’s Public Origins

Silicon Valley often likes to pretend that innovation is the result of entrepreneurs tinkering in garages. But most of the innovation on which Silicon Valley depends comes from government research, for the simple reason that the public sector can afford to take risks that the private sector can’t.

It’s precisely the insulation from market forces that enables government to finance the long-term scientific labor that ends up producing many of the most profitable inventions.

This is particularly true of the Internet. The Internet was such a radical and unlikely idea that only decades of public funding and planning could bring it into existence. Not only did the basic technology have to be invented, but the infrastructure had to be built, specialists had to be trained, and contractors had to be staffed, funded, and in some cases, directly spun off from government agencies.

The Internet is sometimes compared to the interstate highway system, another major public project. But as the legal activist Nathan Newman points out, the comparison only makes sense if the government “had first imagined the possibility of cars, subsidized the invention of the auto industry, funded the technology of concrete and tar, and built the whole initial system.”

The Cold War provided the pretext for this ambitious undertaking. Nothing loosened the purse strings of American politicians quite like the fear of falling behind the Soviet Union. This fear spiked sharply in 1957, when the Soviets put the first satellite into space. The Sputnik launch produced a genuine sense of crisis in the American establishment, and led to a substantial increase in federal research funding.

One consequence was the creation of the Advanced Research Projects Agency (ARPA), which would later change its name to the Defense Advanced Research Projects Agency (DARPA).

ARPA became the R&D arm of the Defense Department. Instead of centralizing research in government labs, ARPA took a more distributed approach, cultivating a community of contractors from both academia and the private sector.

In the early 1960s, ARPA began investing heavily in computing, building big mainframes at universities and other research sites. But even for an agency as generously funded as ARPA, this spending spree wasn’t sustainable. In those days, a computer cost hundreds of thousands, if not millions, of dollars. So ARPA came up with a way to share its computing resources more efficiently among its contractors: it built a network.

This network was ARPANET, and it laid the foundation for the Internet. ARPANET linked computers through an experimental technology called packet-switching, which involved breaking messages down into small chunks called “packets,” routing them through a maze of switches, and reassembling them on the other end.
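To make the split, route, and reassemble cycle concrete, here is a minimal Python sketch. It is a toy, not a model of how ARPANET or modern routers actually worked: the packet size, the helper names (`split_into_packets`, `route`, `reassemble`), and the use of a random shuffle to stand in for packets taking different paths and arriving out of order are all illustrative assumptions. Each packet carries a sequence number so the receiving end can put the pieces back in order.

```python
from __future__ import annotations

import random


def split_into_packets(message: str, size: int) -> list[tuple[int, str]]:
    """Break a message into numbered chunks ("packets") of at most `size` characters."""
    return [
        (seq, message[start:start + size])
        for seq, start in enumerate(range(0, len(message), size))
    ]


def route(packets: list[tuple[int, str]], seed: int | None = None) -> list[tuple[int, str]]:
    """Stand-in for the network (an assumption): packets travel independently
    and may arrive in any order, simulated here with a shuffle."""
    rng = random.Random(seed)
    arrived = list(packets)  # copy so the sender's list is left untouched
    rng.shuffle(arrived)
    return arrived


def reassemble(packets: list[tuple[int, str]]) -> str:
    """Sort packets by sequence number and join their payloads."""
    return "".join(payload for _, payload in sorted(packets))


message = "hello from ARPANET"
packets = split_into_packets(message, 4)
arrived = route(packets, seed=1)
print(reassemble(arrived))  # hello from ARPANET
```

However the packets are scrambled in transit, sorting by sequence number restores the original message, which is the property that let a network of switches carry data without dedicating a single line to each conversation.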

Today, this is the mechanism that moves data across the Internet, but at the time, the telecom industry considered it absurdly impractical. Years earlier, the Air Force had tried to persuade AT&T to build such a network, without success. ARPA even offered ARPANET to AT&T after it was up and running, preferring to buy time on the network instead of managing it itself.

Given the chance to acquire the most sophisticated computer network in the world, AT&T refused. The executives simply couldn’t see the money in it.

Their shortsightedness was fortunate for the rest of us. Under public management, ARPANET flourished. Government control gave the network two major advantages.

The first was money: ARPA could pour cash into the system without having to worry about profitability. The agency commissioned pioneering research from the country’s most talented computer scientists at a scale that would’ve been suicidal for a private corporation.

And, just as crucially, ARPA enforced an open-source ethic that encouraged collaboration and experimentation. The contractors who contributed to ARPANET had to share the source code of their creations, or risk losing their contracts. This catalyzed scientific creativity, as researchers from a range of different institutions could refine and expand on each other’s work without living in fear of intellectual property law.

The most important innovation to result was the set of Internet protocols (TCP/IP), which first emerged in the mid-1970s. These protocols made it possible for ARPANET to evolve into the Internet, by providing a common language that let very different networks talk to one another.

The open and nonproprietary nature of the Internet greatly enhanced its usefulness. It promised a single interoperable standard for digital communication: a universal medium, rather than a patchwork of incompatible commercial dialects.

Promoted by ARPA and embraced by researchers, the Internet grew quickly. Its popularity soon led scientists from outside the military and ARPA’s select circle of contractors to demand access.

In response, the National Science Foundation (NSF) undertook a series of initiatives aimed at bringing the Internet to nearly every university in the country. These culminated in NSFNET, a national network that became the new “backbone” of the Internet.

The backbone was a collection of cables and computers that formed the Internet’s main artery. It resembled a river: data flowed from one end to another, feeding tributaries, which themselves branched into smaller and smaller streams.

These streams served individual users, who never touched the backbone directly. If they sent data to another part of the Internet, it would travel up the chain of tributaries to the backbone, then down another chain, until it reached the stream that served the recipient.
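The river metaphor amounts to a tree: each network hands traffic up to the larger network it connects to. The Python sketch below models that shape under simplifying assumptions; the network names and the `PARENT` table are hypothetical, and real NSFNET-era routing was far more complex. The `path` function climbs from the sender until it reaches a network the recipient also sits beneath, then descends, so traffic between users on different regional networks passes through the backbone.

```python
from __future__ import annotations

# Hypothetical toy topology: each network maps to the larger network it
# connects up to. The backbone sits at the top and has no parent.
PARENT: dict[str, str | None] = {
    "backbone": None,
    "regional_west": "backbone",
    "regional_east": "backbone",
    "campus_a": "regional_west",
    "campus_b": "regional_east",
    "campus_c": "regional_west",
}


def chain_to_top(network: str) -> list[str]:
    """List the networks from `network` up to and including the backbone."""
    chain = []
    current: str | None = network
    while current is not None:
        chain.append(current)
        current = PARENT[current]
    return chain


def path(src: str, dst: str) -> list[str]:
    """Climb from src to the first network dst also sits beneath, then descend to dst."""
    up = chain_to_top(src)
    down = chain_to_top(dst)
    on_dst_chain = set(down)
    # Every chain ends at the backbone, so a meeting point always exists.
    meeting = next(n for n in up if n in on_dst_chain)
    climb = up[: up.index(meeting) + 1]
    descend = list(reversed(down[: down.index(meeting)]))
    return climb + descend


# Users on different regional networks: up the tributaries, across the backbone, back down.
print(path("campus_a", "campus_b"))
# ['campus_a', 'regional_west', 'backbone', 'regional_east', 'campus_b']
```

In this toy model the campuses are the small streams, the regional networks are the tributaries, and the only place the two sides of the country meet is the backbone.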

One lesson of this model is that the Internet needs lots of networks at its edges. The river is useless without tributaries that extend its reach. That is why the NSF, to ensure the broadest possible connectivity, also subsidized a number of regional networks that linked universities and other participating institutions to the NSFNET backbone.

All this wasn't cheap, but it worked. Scholars Jay P. Kesan and Rajiv C. Shah have estimated that the NSFNET program cost over $200 million. Other public sources, including state governments, state-supported universities, and federal agencies, likely spent another $2 billion on networking with the NSFNET.

Thanks to this avalanche of public cash, a cutting-edge communications technology incubated by ARPA became widely available to American researchers by the end of the 1980s.

The Road to Privatization

But by the early nineties, the Internet was becoming a victim of its own success. Congestion plagued the network, and whenever the NSF upgraded it, more people piled on.

In 1988, users sent fewer than a million packets a month. By 1992, they were sending 150 billion. Just as new highways produce more traffic, the NSF’s improvements only stoked demand, overloading the system.

Clearly, people liked the Internet. And these numbers would’ve been even higher if the NSF had imposed fewer restrictions on its users. The NSFNET’s Acceptable Use Policy (AUP) banned commercial traffic, preserving the network for research and education purposes only. The NSF considered this a political necessity, since Congress might cut funding if taxpayer dollars were seen to be subsidizing industry.

In practice, the AUP was largely unenforceable, as companies regularly used the NSFNET. And more broadly, the private sector had been making money off the Internet for decades, both as contractors and as beneficiaries of software, hardware, infrastructure, and engineering talent developed with public funds.

The AUP may have been a legal fiction, but it did have an effect. By formally excluding commercial activity, it spawned a parallel system of private networks. By the early 1990s, a variety of commercial providers had sprung up across the country, offering digital services with no restrictions on the kind of traffic they would carry.

Most of these networks traced their origins to government funding, and enlisted ARPA veterans for their technical expertise. But whatever their advantages, the commercial networks were prohibited by the AUP from connecting to the Internet, which inevitably limited their value.

The Internet had thrived under public ownership, but it was reaching a breaking point. Skyrocketing demand from researchers strained the network, while the AUP prevented it from reaching an even wider audience.

These weren’t easy problems to solve. Opening the Internet to everyone, and building the capacity to accommodate them, presented significant political and technical challenges.

NSFNET director Stephen Wolff came to see privatization as the answer. He believed ceding the Internet to the private sector would bring two big benefits: it would ease congestion by sparking an influx of new investment, and it would abolish the AUP, enabling commercial providers to integrate their networks with NSFNET. Liberated from government control, the Internet could finally become a mass medium.

The first step took place in 1991. A few years earlier, the NSF had awarded the contract for operating its network to a consortium of Michigan universities called Merit, in partnership with IBM and MCI. This group had significantly underbid, sensing a business opportunity. In 1991, they decided to cash in, creating a for-profit subsidiary that started selling commercial access to NSFNET with Wolff’s blessing.

The move enraged the rest of the networking industry. Companies correctly accused the NSF of cutting a backroom deal to grant its contractors a commercial monopoly, and raised enough hell to bring about congressional hearings in 1992.

These hearings didn’t dispute the desirability of privatization, only its terms. Now that Wolff had put privatization in motion, the other commercial providers simply wanted a piece of the action.

One of their chief executives, William Schrader, testified that NSF’s actions were akin to “giving a federal park to K-mart.” The solution wasn’t to preserve the park, however, but to carve it up into multiple K-marts.

The hearings forced the NSF to agree to a greater industry role in designing the future of the network. Predictably, this produced even faster and deeper privatization. Previously, the NSF had considered restructuring NSFNET to allow more contractors to run it.

By 1993, in response to industry input, the NSF had decided on the far more radical step of eliminating NSFNET altogether. Instead of one national backbone, there would be several, all owned and operated by commercial providers.

Industry leaders claimed the redesign ensured a “level playing field.” More accurately, the field remained tilted, but open to a few more players. If the old architecture of the Internet had favored monopoly, the new one would be tailor-made for oligopoly.

There weren’t that many companies that had consolidated enough infrastructure to operate a backbone. Five, to be exact. NSF wasn’t opening the Internet to competition so much as transferring it to a small handful of corporations waiting in the wings.

Strikingly, this transfer came with no conditions. There would be no federal oversight of the new Internet backbones, and no rules governing how the commercial providers ran their infrastructure.

There would also be no more subsidies for the nonprofit regional networks that had wired campuses and communities for Internet access in the NSFNET days. They were soon acquired or bankrupted by for-profit ventures. In 1995, the NSF terminated NSFNET. Within the space of a few short years, privatization was complete.

The rapid privatization of the Internet provoked no opposition and little debate. While Wolff led the way, he was acting within a broad ideological consensus.

The free-market triumphalism of the 1990s, and the intensely deregulatory political climate fostered by Bill Clinton’s Democrats and Newt Gingrich’s Republicans, framed full private ownership of the Internet as both beneficial and inevitable.

The collapse of the Soviet Union strengthened this view, as the Cold War rationale for more robust public planning disappeared. Finally, the depth of industry influence over the process guaranteed that privatization would take an especially extreme form.

Perhaps the most decisive factor in the giveaway was the absence of an organized campaign demanding an alternative. Such a movement might have proposed a range of measures designed to popularize the Internet without completely privatizing it. Instead of abandoning the nonprofit regional networks, the government could have expanded them.

Funded with fees extracted from the commercial backbone providers, these networks could have enabled the government to guarantee high-speed, low-cost Internet access to all Americans as a social right. Meanwhile, the FCC could have regulated the backbones, setting the rates they charged one another for carrying Internet traffic and overseeing them as a public utility.

But enacting even a fraction of these policies would have required a popular mobilization, and the Internet was still relatively obscure in the early nineties, largely confined to academics and specialists. It was hard to build a coalition around democratizing a technology that most people didn’t even know existed.

In this landscape, privatization scored a victory so complete that it became nearly invisible, and quietly revolutionized the technology that would soon revolutionize the world.

Reclaiming the People’s Platform

Twenty years later, the Internet has grown tremendously, but the ownership structure of its core infrastructure is mostly the same. In 1995, five companies owned the Internet backbone. Today, there are somewhere between seven and twelve major backbone providers in the United States, depending on how you count, and more overseas.

While a long chain of mergers and acquisitions has led to rebranding and reorganization, many of the biggest American backbone companies, such as AT&T, Cogent, Sprint, and Verizon, have links to the original oligopoly.

The terms of privatization have made it easy for incumbents to protect their position. To form a unified Internet, the backbones must interconnect with each other and with smaller providers. This is how traffic travels from one part of the Internet to another. Yet because the government specified no interconnection policy when it privatized the Internet, the backbones can broker whatever arrangement they want.

Typically, the big backbones let each other interconnect for free (an arrangement known as "settlement-free peering") because it works to their mutual benefit, but they charge smaller providers for carrying traffic. These contracts aren't just unregulated; they're usually secret. Negotiated behind closed doors with the help of nondisclosure agreements, they ensure that the deep workings of the Internet are not only controlled by big corporations, but hidden from public view.

More recently, new concentrations of power have emerged. The backbone isn’t the only piece of the Internet that’s held by relatively few hands. Today, more than half of the data flowing to American users at peak hours comes from only thirty companies, with Netflix occupying an especially large chunk.

Similarly, telecom and cable giants like Comcast, Verizon, and Time Warner Cable dominate the market for broadband service. These two industries have transformed the architecture of the Internet by building shortcuts to each other’s networks, bypassing the backbone. Content providers like Netflix now pipe their video directly to broadband providers like Comcast, avoiding a circuitous route through the bowels of the Internet.

These deals have triggered a firestorm of controversy, and helped produce the first tentative steps towards Internet regulation in the United States. In 2015, the FCC announced its strongest ruling to date enforcing “net neutrality,” the principle that Internet service providers should treat all data the same way, regardless of whether it comes from Netflix or someone’s blog.

In practice, net neutrality is impossible given the current structure of the Internet. But as a rallying cry, it has focused significant public attention on corporate control of the Internet, and produced real victories.

The FCC ruling reclassified broadband providers as “common carriers,” which makes them subject to telecom regulation for the first time. And the agency has promised to use these new powers to ban broadband companies from blocking traffic to particular sites, slowing customer speeds, and accepting “paid prioritization” from content providers.

The FCC decision is a good start, but it doesn’t go nearly far enough. It explicitly rejects “prescriptive, industry-wide rate regulation,” and exempts broadband providers from many of the provisions of the New Deal-era Communications Act of 1934.

It also focuses on broadband, neglecting the Internet backbone. But the decision is a wedge that can be widened, especially because the FCC has left open many of the specifics around its implementation.

Another promising front is municipal broadband. In 2010, the city-owned power utility in Chattanooga, Tennessee, began selling affordable high-speed Internet service to residents. Using a fiber-optic network built partly with federal stimulus funds, the utility offers some of the fastest residential Internet speeds in the world.

The broadband industry has responded forcefully, lobbying state legislatures to ban or limit similar experiments. But the success of the Chattanooga model has inspired movements for municipal broadband in several other cities, including Seattle, where socialist city councilwoman Kshama Sawant has long championed the idea.

These may seem like small steps, but they point toward the possibility of building a popular movement to reverse privatization. This involves not only agitating for expanded FCC oversight and publicly owned broadband utilities, but changing the rhetoric around Internet reform.

One of the more damaging obsessions among Internet reformers is the notion that greater competition will democratize the Internet. The Internet requires a lot of infrastructure to run. It is misguided to slice the big corporations that own this infrastructure into smaller and smaller companies in the hope that the market will eventually kick in and produce better outcomes.

Instead of trying to escape the bigness of the Internet, we should embrace it — and bring it under democratic control. This means replacing private providers with public alternatives where it’s feasible, and regulating them where it’s not.

There is nothing in the pipes or protocols of the Internet that obliges it to produce immense concentrations of corporate power. This is a political choice, and we can choose differently.